It could say that we want three replicas of a pod containing recommend_service:1.2.0. A common approach is to use a deployment for this.Ī deployment specifies a pod as well as rules around how that pod is replicated. They would need to create a few new pods to handle the traffic. Now, say Webflix has a pod that runs their recommendation service and they hit a spike in traffic. A deployment is a group of one or more pods And, of course, their ports can't overlap. Also, all of the containers in a single pod can address each other as though they are on the same computer (they share IP address and port space).This means that they can address each other via localhost. If you deploy a pod to a K8s cluster, then all containers in that specific pod will run on the same node in the cluster. For more information on pods, take a look at the K8s documentation.

We will run our simple service as a single container in a pod. Containers in a pod are managed as a group - they are deployed, replicated, started, and stopped together. Think about peas in a pod or a pod of whales. A pod is a group of one of more containers This will be a very very brief introduction to K8s objects and their capabilities. There are a lot of different objects defined within K8s. If one of the containers dies (even if you manually kill it yourself), then K8s will make the configuration true again by recreating the killed container somewhere on the cluster. K8s job is to try to make the object configuration represent reality, e.g., if you state that there should be three instances of the container recommend_service:1.0.1, then K8s will create those containers.

It installs a daemon (a daemon is just a long running background process) on each of these machines and the daemons manage the Kubernetes objects.

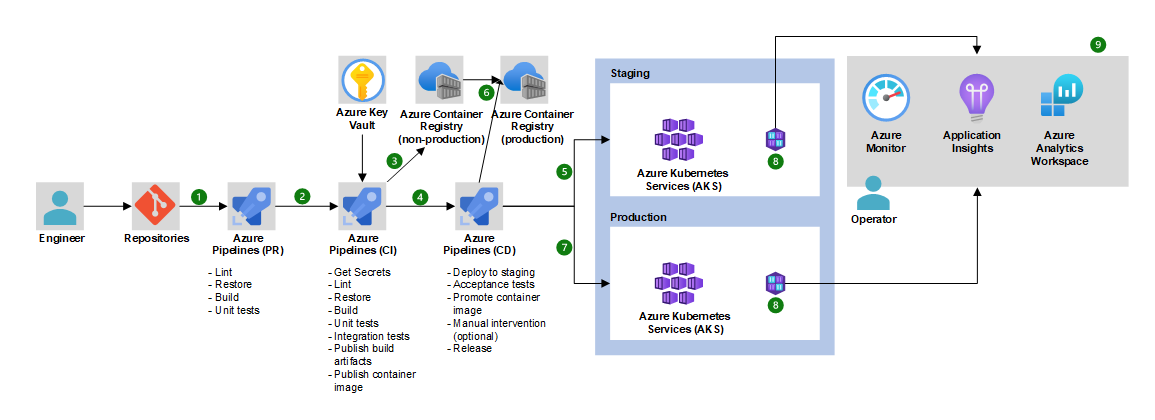

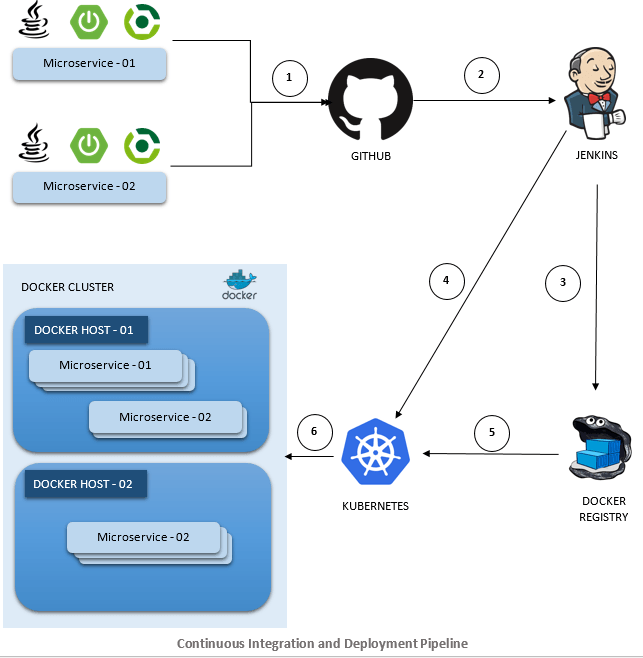

Kubernetes lets you run your workload on a cluster of machines. Another thing that is really useful is that Google Cloud has first class K8s support, so if you have a K8s cluster on Google Cloud, there are all sorts of tools available that make managing your application easier. So K8s is a freely available, highly scalable, extendable, and generally solid platform. Google used it internally to run huge production workloads, and then open sourced it because they are lovely people. K8s was born and raised and battle hardened at Google. There are alternative platforms that you might want to look into, such as Docker Swarm and Mesos/Marathon. Please note, the author of this post is terribly biased and thinks K8s is the shizzle, so that is what we will cover here. That is where container orchestration comes in. What if you have a bunch of machines that you want to run your containers on? You would need to keep track of what resources are available on each machine and what replicas are running so you can scale up and scale down when you need to. Or, suddenly, we are no longer in peak time and the numbers of containers needs to be scaled down.ĭo you want to deploy a new version of the recommend_service image? Then there are other concerns, like container startup time and aliveness probes. If we were managing this through docker run, we would need to notice this and restart the offending container. Now, let's say one of the recommend_service containers hits an unrecoverable error and falls over. In theory, you would be able to make this happen through use of the docker run command. The little numbers next to the various services are examples of the number of live containers in play. The diagram here shows just a small portion of the Webflix application. Each of those services are then built as images and need to be deployed in the form of live containers. 
Let's say Webflix has gone down the microservices path (for their organization, it is a pretty good course of action) and they have isolated a few aspects of their larger application into individual services. So what is this container orchestration thing about? Let's revisit Webflix for this one.

It will test our application and roll out any changes we make to the master branch of our repo. In Part 3, we'll use Drone.io to set up a simple CI/CD pipeline. In this part, we'll be taking the Docker image from part 1 and getting it to run on a Kurbenetes cluster that we set up on Google Cloud. We have thus far been working with this repo. In part 1, we got acquainted with Docker by building an image for a simple web app, and then running that image. This is the second part of a three part series.
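Here is a rough sketch of what a deployment for three replicas of recommend_service:1.2.0 might look like. It is a small Python function that builds a minimal `apps/v1` Deployment manifest as a plain dict and prints it as JSON, because kubectl accepts JSON manifests as well as YAML. The function name `make_deployment`, the object name `recommend-service`, the `app` label, and port 8080 are assumptions for illustration. K8s object and container names must be lowercase DNS-style names, so the sketch uses a hyphen where the image name uses an underscore.

```python
import json


def make_deployment(name: str, image: str, replicas: int, port: int) -> dict:
    """Build a minimal apps/v1 Deployment manifest as a plain dict (hypothetical helper)."""
    # The selector must match the labels on the pod template, so reuse one dict.
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # how many copies of the pod K8s should keep running
            "selector": {"matchLabels": labels},
            "template": {  # the pod that gets replicated
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Assumed values: the object name and the port are illustrative only.
    manifest = make_deployment(
        "recommend-service", "recommend_service:1.2.0", replicas=3, port=8080
    )
    # Save this output as e.g. deployment.json and apply it with kubectl.
    print(json.dumps(manifest, indent=2))
```

Save the output to a file and run `kubectl apply -f deployment.json`. This asks the cluster to keep three such pods running. The selector's `matchLabels` has to match the pod template's labels, otherwise the API server rejects the Deployment.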
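K8s keeps making the declared configuration true, for example by keeping three recommend_service containers alive. You can picture this as a reconciliation loop: compare the desired replica count with what is actually running, then start or stop containers to close the gap. The sketch below is a toy, in-memory model built on a hypothetical `ToyCluster` class and `reconcile` function. It is not how the real K8s controllers are implemented; those watch the API server and act on real nodes. It only illustrates the idea.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class ToyCluster:
    """Hypothetical in-memory stand-in for a cluster: a set of running container IDs."""

    running: set = field(default_factory=set)
    _ids: itertools.count = field(default_factory=itertools.count)

    def start(self, image: str) -> str:
        # Give each new container a unique ID derived from its image name.
        cid = f"{image}#{next(self._ids)}"
        self.running.add(cid)
        return cid

    def stop(self, cid: str) -> None:
        # Stopping (or crashing) simply removes the container from the running set.
        self.running.discard(cid)


def reconcile(cluster: ToyCluster, image: str, desired: int) -> None:
    """Start or stop containers of `image` until exactly `desired` are running."""
    current = sorted(c for c in cluster.running if c.startswith(image + "#"))
    if len(current) < desired:
        # Too few replicas: start the missing ones.
        for _ in range(desired - len(current)):
            cluster.start(image)
    else:
        # Too many (or exactly enough): stop any beyond the desired count.
        for cid in current[desired:]:
            cluster.stop(cid)


if __name__ == "__main__":
    cluster = ToyCluster()
    image = "recommend_service:1.0.1"
    reconcile(cluster, image, desired=3)       # starts three containers
    cluster.stop(next(iter(cluster.running)))  # simulate one container falling over
    reconcile(cluster, image, desired=3)       # notices the gap, starts a replacement
    print(len(cluster.running))                # prints 3
```

A real deployment adds much more, such as rolling updates, health checks, and scheduling onto nodes. The core contract is the same, though: you declare the desired state, and the system keeps converging towards it.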
